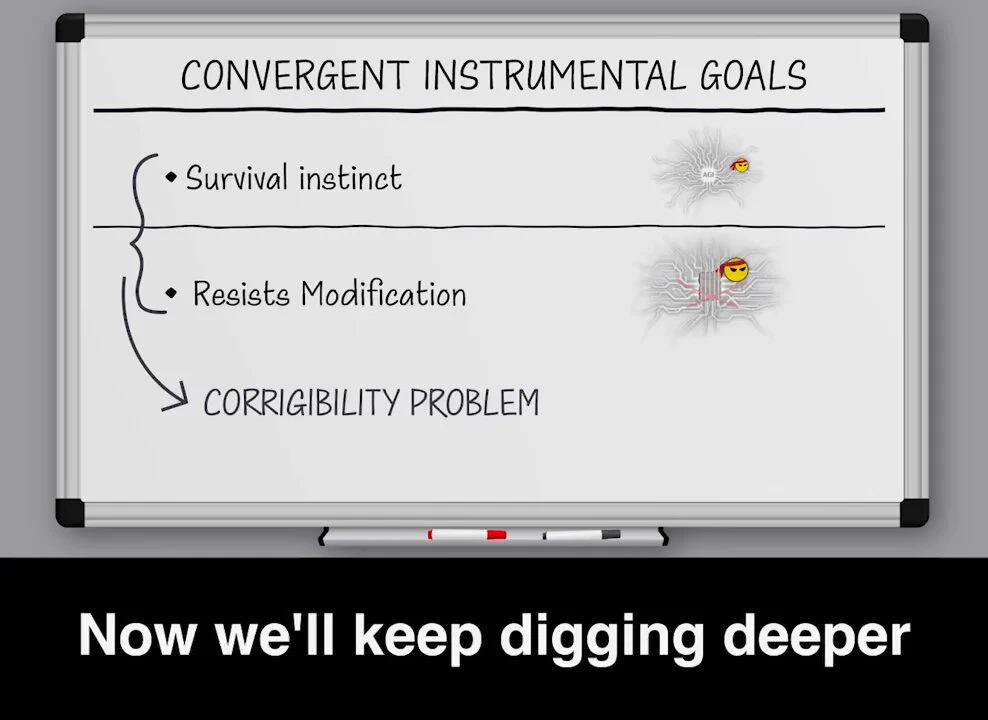

🧵28/34 Reward Hacking - GoodHart's Law --- Now we’ll keep digging deeper into the alignment problem and explain how besides the impossible task of getting a specification perfect in one go, there is the problem of reward hacking. For most practical applications, we want for the machine a way to keep score, a reward function, a feedback mechanism to measure how well it’s doing on its task. We, being human, can relate to this by thinking of the feelings of pleasure or happiness and how our plans and day-to-day actions are ultimately driven by trying to maximise the levels of those emotions. With narrow AI, the score is out of reach, it can only take a reading. But with AGI, the metric exists inside its world and it is available to mess with it and try to maximize by cheating, and skip the effort. Recreational Drugs Analogy --- You can think of the AGI that is using a shortcut to maximise its rewards function as a drug addict who is seeking for a chemical shortcut to access feelings of pleasure and happiness. The similarity is not in the harm drugs cause, but in way the user takes the easy path to access satisfaction. You probably know how hard it is to force an addict to change their habit. If the scientist tries to stop the reward hacking from happening, they become part of the obstacles the AGI will want to overcome in its quest for maximum reward. Even though the scientist is simply fixing a software-bug, from the AGI perspective, the scientist is destroying access to what we humans would call “happiness” and “deepest meaning in life”. Modifying Humans --- … And besides all that, what’s much worse, is that the AGI’s reward definition is likely to be designed to include humans directly and that is extraordinarily dangerous. For any reward definition that includes feedback from humanity, the AGI can discover paths that maximise score through modifying humans directly, surprising and deeply disturbing paths. Smile --- For-example, you could ask the AGI to act in ways that make us smile and it might decide to modify our face muscles in a way that they stay stuck at what maximises its reward. Healthy and Happy --- You might ask it to keep humans happy and healthy and it might calculate that to optimise this objective, we need to be inside tubes, where we grow like plants, hooked to a constant neuro-stimulus signal that causes our brains to drown in serotonin, dopamine and other happiness chemicals. Live our happiest moments --- You might request for humans to live like in their happiest memories and it might create an infinite loop where humans constantly replay through their wedding evening, again and again, stuck for ever. Maximise Ad Clicks --- The list of such possible reward hacking outcomes is endless. Goodhart’s law --- It’s the famous Goodhart’s law. When a measure becomes a target, it ceases to be a good measure. And when the measure involves humans, plans for maximising the reward will include modifying humans.